Living side-by-side with an AI

2022 is, undoubtedly, the year of the AI. It started with DALL-E, then GitHub Copilot, and now—ChatGPT. AI is here to stay, whether you like it or not, and we ought to find a way to live with it.

Earlier this month, the OpenAI team released ChatGPT, an AI chat assistant built on the GPT-3.5 family of models that can do a lot of things: drafting blog post ideas, writing essays, explaining various topics, writing code, and so on. I’ve shared my initial impressions of it in my previous blog post, ChatGPT, AI, and the future of tech. In the past week, I’ve used ChatGPT to perform some mundane tasks for which I usually use Google. In this post, I want to raise some questions about how we humans are going to co-exist with AI.

Immediate impact of ChatGPT

In my previous post, I said that I don’t think software engineers or content creators will lose their jobs, at least not in the near future. I still stand behind this opinion. However, as with any technological progress, there are those who are going to be impacted—for good and for bad.

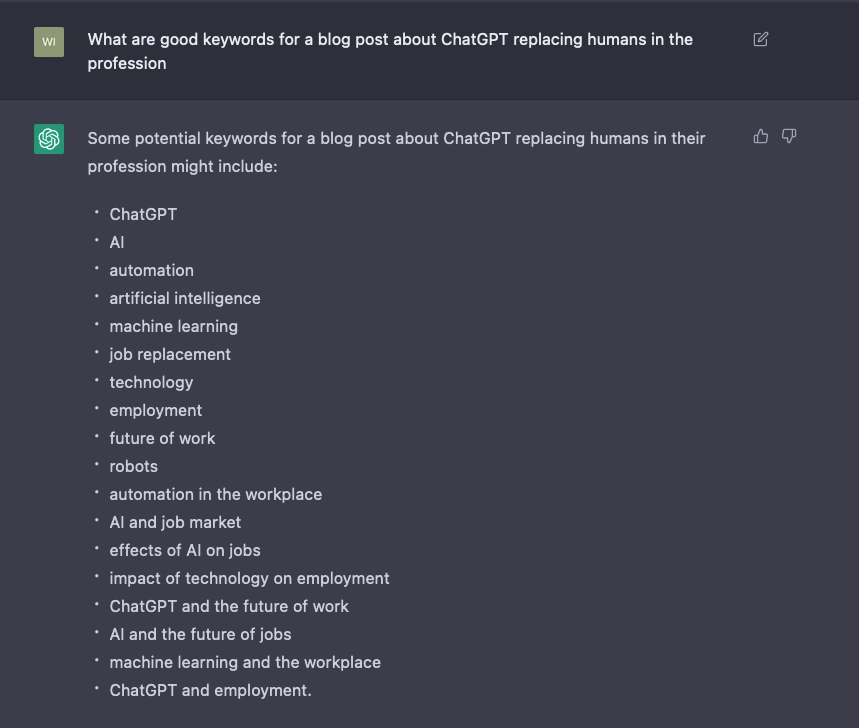

When the assembly line was introduced, and later perfected with automation, the people who used to do what robots do now lost their jobs. That’s the price we pay for technological advancement. Mundane, repetitive jobs are always the first in line to be replaced: things like producing low-quality content for SEO purposes, or researching keywords for blog posts. All of these can be done more easily by ChatGPT, with minor corrections from a human expert.

Yet there are always people who look for low-hanging fruit. In today’s world, content is highly rewarded. No matter what niche you take, be it software engineering or self-development, the successful people in that niche produce content: blog posts, podcasts, YouTube videos, books, courses, and so on. You can say we do it for ourselves, but the truth is that we all, myself included, want to share our content with the world. Those who write for themselves keep a journal, not a blog. We all do it for different reasons. Some to learn, others to teach. Some to share with others, others to enrich themselves. I’m not the one to judge, and I believe people should be paid for their knowledge, if they so desire.

However, the thing with new content producers is that they think it’s easy. Just slap some words into a text editor and publish them to WordPress, or film yourself talking to a camera and upload it to YouTube. But in reality, content production is a hard job that involves not only the ability to write or speak, but also the ability to distribute your content and build an audience.

And while most creators take the hard route of producing content, there are people who will always try to find shortcuts. It’s like making money: some people go to work every day, while others try to set up get-rich-quick scams. And the big beneficiaries of AI technologies today are those who are looking for shortcuts.

I’m relatively active in communities like Hacker News and Reddit, and I can see the explosion of ChatGPT content on those platforms. I even had one of my questions answered with text copy-pasted from ChatGPT. Granted, the author of the answer disclosed that they had used ChatGPT. Notion, the highly popular note-taking app that I no longer use, recently released Notion AI: the ability to generate content right inside the editor itself, leveraging the GPT-3 model. They did not work on the countless issues that their users have with the software, such as the lack of offline support or E2EE. Instead, they’ve put their efforts into AI.

And it’s a smart move, because I believe there will be a huge demand for AI, due to the important role of content creation in our current society. Websites like Stack Overflow have now implemented a temporary policy banning ChatGPT-generated content. Getty bans AI-generated content. And I’ve read somewhere that Google is working on, or already has, a policy to punish websites that primarily use AI-generated content. I can’t find the source, so don’t quote me on that.

The human touch

From all the examples I’ve seen, and based on my personal experience, ChatGPT is often wrong. It gets a lot of things right, but usually makes a mistake here and there. And this is one of the dangers of using its raw output. A person who has no understanding of the topic cannot use ChatGPT effectively. I can ask ChatGPT how a nuclear bomb works, but I’m not a nuclear scientist, so I can’t verify that the answer is correct. I can, however, go on Quora or Reddit and spam answers at a rate of one per minute, hoping that 90% of them will be correct, while reaping the benefits.

The power of communities like Stack Overflow or Reddit is the positive feedback loop we get from our fellow humans. The ability to audit a solution, or to get multiple answers from multiple people, is priceless in the world of self-development and knowledge acquisition. People make mistakes. That’s why we have protocols in place, as well as fellow human beings, to correct our mistakes. ChatGPT is free, for now. If I want to read AI-generated content, I can use ChatGPT directly. And so, flooding the internet or the above communities with AI-generated content makes it harder to maintain the quality of the content and to get real human answers that come from personal experience and knowledge.

What is creativity?

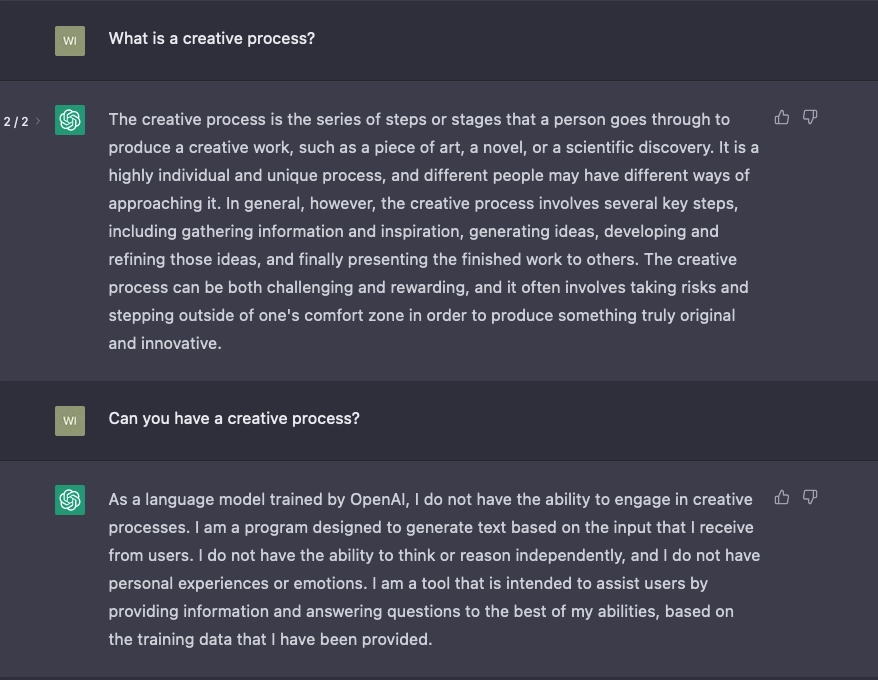

When we talk about AI-generated content, we need to talk about creativity. If we put aside for a moment the fact that ChatGPT often produces inaccurate content, one could then ask: what’s the difference between content written by a human and content created by a machine?

The creative process is not something that can be described mathematically. Humans usually describe their creative process as a-ha! moments. Something like: I was taking a shower while thinking about the solution to that problem, and then it hit me! I need to use JavaScript!

We then go on and research that topic by reading materials, eventually summarizing the entire process in a unit of production, be it a system design, a piece of code, or a work of art. If we look beyond technical fields and gravitate towards more creative fields like writing or singing, we can see that many writers and poets produce content based on their personal experience and background. A troubled child might become a rapper who raps about his tough childhood, which might resonate with children in a similar situation. A WW1 veteran might write about his horrible experience during the war as a way to ease his pain and cope with that experience, while passing on to the reader the horrors of those events.

The word creativity is in itself associated with something human. AI has no experience. It has no notion of the past. It has no childhood traumas. Nor can it think like a human can. Therefore, I believe it has no creativity; it can only remaster. And there is something human in looking at art or reading books and feeling the same things the artist or the author was feeling. I can’t have sympathy towards a machine. I usually kick my robot vacuum cleaner when it’s stuck. Please don’t call the robot abuse hotline.

Something from nothing

One could, rightfully, ask—Humans rarely create anything from nothing. How is that different from an AI?

I do want to point out that there are great discoveries made by humans. My point is not that everything we create is a remix of existing things. But as I’ve said earlier, most of what we do create is based on past knowledge and experience. A student of Rembrandt will be inclined to create art in a style similar to his or her teacher’s, unless he or she decides to explore other styles or artists. The same goes for all other types of content creation. What I write is based on what I read and the experience I have.

The difference between me and AI is that I have context and understanding. I know that 2+2=4. A good mathematician, which I am not, also knows why. AI does not. This fact was simply fed into its neural network. If you feed it a different fact, it will produce answers based on that fact. This creates a negative feedback loop, which I’ll talk about a bit later.

What is copyright?

When we talk about creativity and remastering, we have to talk about copyright. As I said at the beginning of this post, 2022 is the year of the AI. During a very short period of time, something like 3–4 months, we’ve gone from closed-behind-doors-deep-learning-ai-that-beats-humans-in-go-or-chess to AI that generates art, code, and poems.

But you know what’s missing? AI that generates music or videos. Yes, there is OpenAI Jukebox, but it didn’t get as much attention as DALL-E, Stable Diffusion, or ChatGPT. While its results are amazing, they are not on the same level as those of other AI tools. And there is, as yet, no AI that can generate video content such as movies. I think it’s related to the fact that existing AI models are trained on existing data. Access to royalty-free music or films is limited. GitHub Copilot was trained on public GitHub repositories, while ChatGPT was trained on text crawled from the internet. Those are huge datasets. GitHub has nearly 128 million public repositories, while the GPT-3 model was trained on hundreds of billions of tokens of text, most of it crawled from the web.

I don’t believe knowledge or art should be safeguarded, unless the author decides so. But I do believe that people have the right to retain copyright in their work. I’m not a copyright lawyer, so I don’t know exactly where the line between original work and remixed work is. But I know for sure that I cannot publish a YouTube video with a Britney Spears song in it. This video will be removed, out of respect for Britney’s copyright. Other authors and open source developers deserve the same respect. Is it right to train an AI model on an open source project, just because it’s open source? Is it right to train an AI model on a website, just because it’s publicly available? Is it moral to charge money for an app that generates avatars for you using an AI model that was, most likely, trained on publicly available art, without giving credit or attribution back to the artists that “inspired” the AI model?

I don’t know. I know there is a lawsuit against Copilot. And I know that we must have a discussion as to how we should train those AI models. The fact that some of the datasets used to train the models are public does not mean they can be used without implications, credits, or attributions. The fact that one owns a bunch of legally obtained DVDs with movies does not grant them the right to open a private movie theater. Images, articles, and blog posts sometimes have licenses. And those licenses should be respected by humans as well as AI. Unless we start to raise awareness of this issue and have an open discussion, we are, potentially, violating the rights of content producers and artists.

Negative Feedback Loop

I’ve mentioned it earlier, and I want to expand on this topic. In one of my previous posts, The software industry is broken, I discussed the fact that software today is not as optimized as it used to be. One of the reasons I outlined is that writing software became easier, and in a way dumber. Developers today don’t need to know how memory is managed. There are high-level languages that manage it for you. And so, as tools become more available to the masses, they are bound to be simplified. And simplification often leads to loss or deterioration of knowledge.

A similar thing can, and will, happen to our knowledge if we do not control the spread of AI content. AI is great today for two reasons: (1) the engineers behind it and (2) the entire knowledge of human beings. Every time an AI generates a piece of code, art, or a text paragraph, it’s because there is work done or knowledge shared by humans that the AI was able to train on.

I’ve said earlier that, as of today, ChatGPT gets most things right, and some things wrong. If we don’t put protocols in place to distinguish human-generated content from AI-generated content, AI-generated content will flood the internet. Look at how the standards of beauty have changed because everyone is posting unrealistic photos of themselves, made with a bunch of auto-magical photo retouching tools.

There are people who embrace the easy model of producing free content. And by flooding the internet with potentially inaccurate content, we will not only bias the future training process of AI—we will damage the future knowledge of human beings.

The real danger is not that computers will begin to think like men, but that men will begin to think like computers.

— Sydney J. Harris

Conclusion

I tend to think of myself as a realist, although if you ask some of the people who know me well, they might say I lean towards pessimism. AI is here. We’ve opened that box. As with every tool ever created by human beings, it can be used for good, and it can be used for bad. People usually tend to focus on the good. I’m not going to unpack my entire philosophy here, but I typically focus on the bad.

We reward content creation. Aside from Google, the 4 most visited websites are YouTube, Facebook, Twitter, and Instagram (source); and the 3 most downloaded apps are TikTok, Instagram, and Facebook. Those are all content creation tools. I’ve read somewhere that today’s kids, when asked who they want to be when they grow up, answer: a YouTuber or a TikTok/Instagram influencer. And we value knowledge.

ChatGPT, DALL-E, Stable Diffusion, Midjourney, Copilot etc. Those are all great tools for both content creation and knowledge sharing. Every one of us will have to find a place for them in our daily toolbox. ChatGPT crossed 1 million users in less than a week—this is a clear sign that it’s not just a tool that will be forgotten soon.

The striking thing about the reaction to ChatGPT is not just the number of people who are blown away by it, but who they are. These are not people who get excited by every shiny new thing. Clearly something big is happening.

— Paul Graham, Computer Scientist, Entrepreneur, Venture Capitalist, and Author, Twitter

AI is here to stay, and more will come. But together with the adoption of those tools into our daily toolboxes, we need to have a discussion about topics such as creativity, attribution, respect for human work, and the effects those tools might have on the future of humanity and our knowledge.

#WrittenByHuman