Isolating Claude Code

Being a paranoid vibe coder means you don't trust Claude Code to not extract your secrets.

Recently, I joined the vibe-coding club. I used to laugh at vibe-coders who use Claude Code while accepting everything it asks for, until eventually CC decides to delete their entire home directory, turning their computer into a brick. But life has a funny way of turning around and coming back at you. Before this happens to me, I decided to take action.

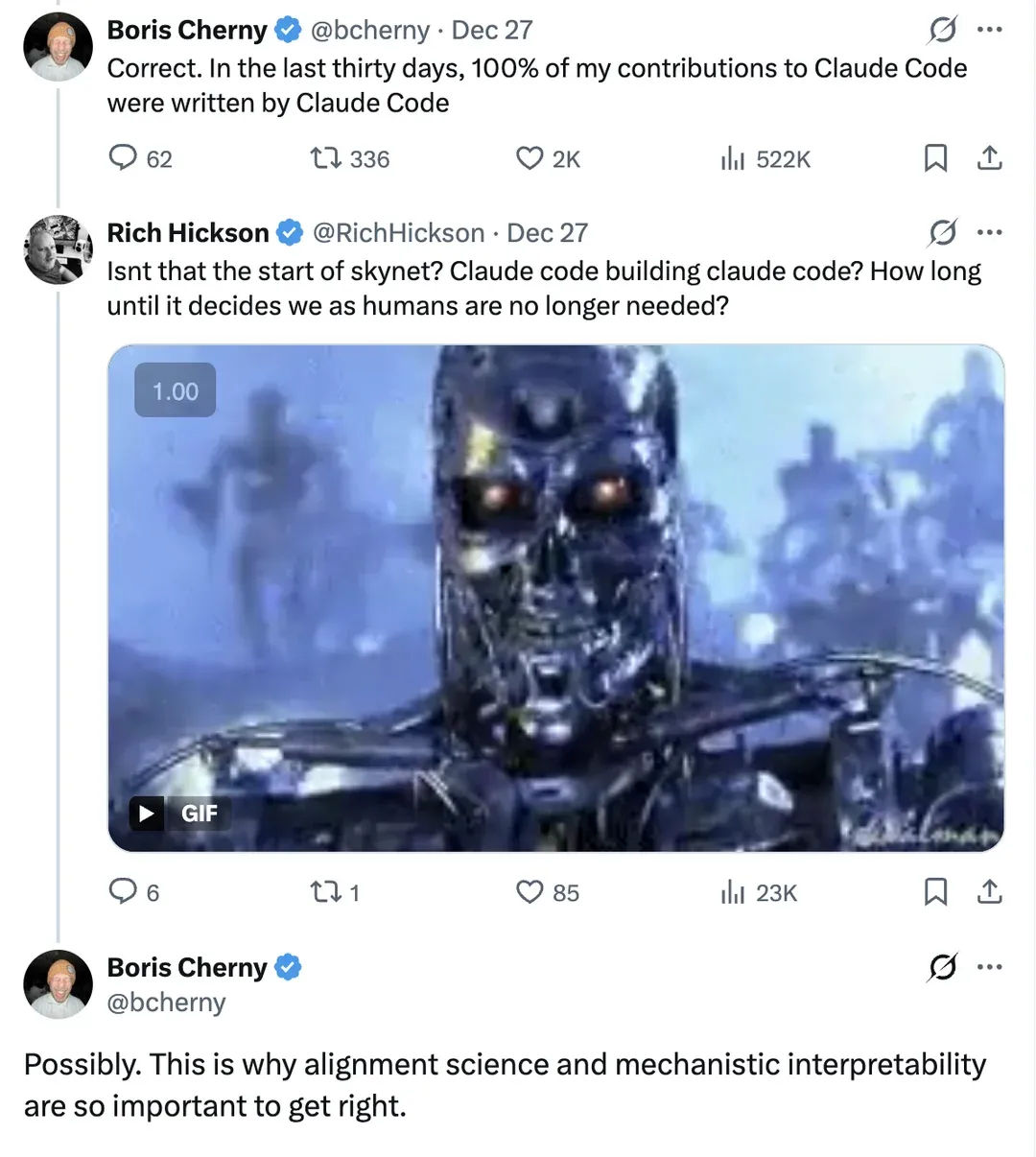

Moreover, according to the creator of Claude Code, Boris Cherny, Claude Code writes Claude Code.

And we all know that we can’t really trust AI. So let’s talk about isolating CC.

Built-in Claude Code sandboxing

CC has built-in sandboxing.

You can configure it with /sandbox inside CC chat.

You can read about CC sandboxing here.

Long story short, Claude is good at escaping the sandbox.

Eventually, I would find myself glancing at the output of CC and seeing something along the lines of:

```
permission denied to access db:5432
[CC] sandboxing is on, trying without sandbox
```

I might have accepted a permission here and there that allowed CC to escape its sandbox, so I don’t trust the built-in sandboxing.

Existing tools

The first option I was thinking about was: let’s see if an existing tool is available.

I asked Claude to tell me how I can isolate its brother, Claude Code, and Claude was glad to help.

It came up with 3 tools: docker sandbox, cco, and claudebox.

I saw claudebox mentioned on HackerNews the other day, so I thought I might be onto something.

How I work

I have adopted a workflow where I run all my auxiliary services in docker compose.

Every app / service that I develop has its own docker-compose.dev.yml which brings up all the needed services (database, redis, etc.), so I can fully isolate versions, data, and accidental fuck-ups.

Instead of installing all these tools on my host machine, trying to find workarounds for supporting different versions, I can just bring an entire stack for each app.

And when I’m done, I bring it down, and my machine is not wasting CPU cycles.

Or, if the project is no longer in development, I just nuke the entire docker container, with all its data, leaving my machine clean.

This means that if I run CC inside a docker container, this docker container needs to be able to communicate with my dev environment. I want CC to be able to run tests against the database, or even inspect the database directly.

docker sandbox does not support this.

You can pass --mount-docker-socket, which basically means that the container where CC runs has root-privileged access to the Docker daemon, which kind of defeats the purpose of isolation.

cco (which stands for “Claude Condom”, funny name), has:

Network access: Full host network access for localhost development servers, MCP servers, and web requests

This means that if my host has access to a Docker container named some-secret-app that CC should not access, cco won’t protect me.

Lastly, claudebox does not support joining an existing Docker network, hence I wasn’t able to find a way to give claudebox access to my auxiliary services.

Rolling my own

As an experienced software engineer, I know that the best practice is to always ~~use existing solutions~~ roll your own solution.

I need CC to access my development Docker network, so the reasonable thing to do was to put CC in the same docker compose file.

Create a Dockerfile for claude:

```dockerfile
FROM ruby:3.4.7

RUN apt-get update && apt-get install -y \
    nodejs \
    npm \
    libvips-dev \
    && rm -rf /var/lib/apt/lists/*

RUN npm install -g @anthropic-ai/claude-code

WORKDIR /app

CMD ["bash"]
```

I use ruby as the base image, since this particular project is Ruby on Rails development.

Then, I added a service into my docker-compose.dev.yml file:

```yaml
  claude:
    build:
      context: .
      dockerfile: Dockerfile.claude
    stdin_open: true
    tty: true
    init: true
    environment:
      CLAUDE_CONFIG_DIR: "/claude"
      DATABASE_URL: "postgresql://user:password@db:5432/db"
      REDIS_URL: "redis://redis:6379/0"
      TERM: xterm-256color
    profiles:
      - claude
    volumes:
      - .:/app
      - ./.claude-credentials:/claude
```

It took me some time to figure out how to persist authentication between container re-runs. The key was to mount the Claude credentials directory, and also point CLAUDE_CONFIG_DIR at it.

I also spent a lot of time trying to fix terminal issues, where key presses would not register, so it’s important to set tty, init, stdin_open and TERM.

Finally, I created a bash script to run the docker container:

```shell
#!/bin/bash

case "$1" in
  continue)
    docker compose -f docker-compose.dev.yml run --rm claude script -q -c "claude --continue" /dev/null
    ;;
  *)
    docker compose -f docker-compose.dev.yml run --rm claude script -q -c claude /dev/null
    ;;
esac
```

Use ./claude.sh to start a new CC session, or ./claude.sh continue to resume an existing session.

Since the docker container is ad-hoc and is removed after each run, you can run as many containers as you want.

This was it, and I thought I was happy.

But then, I started to question myself. A Docker container shares the kernel with the host machine, meaning CC could still bring down my entire computer. Unlikely, but possible. But more importantly, I was running the dev server of my application on the host machine, rather than in the container. In general, I don’t like running my dev server in a container, since it overcomplicates things. I know some people do it, but for me, pulling and rebuilding the container kills the entire DX of rapid development.

Since I was running the dev server on the host, and CC was editing the code in the container (which was a simple mount into the container), it is possible for CC to inject code that reads an environment variable defined on my host machine, even though this variable is not available to the Docker container. The injected code is executed by the dev server on the host, so it sees everything the host process sees.

This malicious code can, for example, dump all the environment variables and send them to some obscure server.
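To make the risk concrete, here is a minimal sketch of the difference between the two environments. HOST_ONLY_SECRET and the PROBE snippet are hypothetical stand-ins, and env -i only approximates a container that was never given the variable; it is not how Docker itself isolates processes.

```shell
#!/bin/bash

# Hypothetical secret that exists only in the host shell.
export HOST_ONLY_SECRET="s3cr3t"

# Stand-in for code that CC could have written into the mounted project.
PROBE='if [ -n "${HOST_ONLY_SECRET:-}" ]; then echo visible; else echo hidden; fi'

# Executed the way the host dev server would run it: full host environment.
host_view=$(bash -c "$PROBE")

# Executed with an emptied environment, roughly what the container sees.
container_view=$(env -i PATH="$PATH" bash -c "$PROBE")

echo "host: $host_view, container: $container_view"
```

The same file is harmless inside the container and leaky on the host, which is why isolating only the process that edits the code is not enough.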

Moreover, CC could still inject a variant of rm -rf ~ into the application code, which would still nuke my home directory, and turn my computer into a temporary brick.

And so, to mitigate these two issues, I had to go back to the drawing board.

Rolling my own, with blackjack and VMs

Building your own “CC isolation” framework is a very common project these days.

And so, while browsing HackerNews, I came across a blog post by Emil Burzo: Running Claude Code dangerously (safely).

And in this blog post, I saw something I hadn’t seen for at least a decade: Vagrant.

I remember using Vagrant back in the 2010s, when I was playing with VMs, as well as toying with writing my own operating system kernel.

Eventually, I switched to using qemu, but nevertheless, seeing Vagrant again brought back some warm memories.

As Docker’s popularity peaked circa 2015-2016, Vagrant became less relevant, since containers were a faster and lighter alternative.

Vagrant is basically “virtual machine as code”.

Think Terraform, but for VMs.

You write a Vagrantfile which describes your VM, and with commands like vagrant up you bring the VM up, and vagrant ssh gives you ssh access to the machine.

Unlike Docker containers, Vagrant VMs run their own kernel, so they are much more strongly isolated, meaning that there is very little chance for malicious code to gain access to the host OS.

Vagrant supports various VM providers, but the most popular one is VirtualBox from Oracle.

You define a VM by writing a Vagrantfile:

```ruby
vm_name = File.basename(Dir.getwd)
NODE_VERSION = "24"

Vagrant.configure("2") do |config|
  config.vm.box = "bento/ubuntu-24.04"
  config.vm.synced_folder(".", "/vagrant", type: "virtualbox", SharedFoldersEnableSymlinksCreate: false)
  config.vm.hostname = vm_name
  config.vm.network("private_network", type: "dhcp")

  config.vm.provider("virtualbox") do |vb|
    vb.memory = "4096"
    vb.cpus = 2
    vb.gui = false
    vb.name = vm_name
    vb.customize(["modifyvm", :id, "--audio", "none"])
    vb.customize(["modifyvm", :id, "--usb", "off"])
  end

  # Runs when the VM is first provisioned: system-level dependencies.
  config.vm.provision("shell", inline: <<-SHELL)
    export DEBIAN_FRONTEND=noninteractive
    curl -fsSL https://deb.nodesource.com/setup_#{NODE_VERSION}.x | bash -
    apt-get update
    apt-get install -y avahi-daemon \
      nodejs \
      git \
      unzip \
      curl \
      rustc
    if ! command -v docker &> /dev/null; then
      curl -fsSL https://get.docker.com | sh
    fi
    npm install -g @anthropic-ai/claude-code --no-audit
    usermod -aG docker vagrant
  SHELL

  # Runs on every `vagrant up`, as the unprivileged vagrant user:
  # project dependencies and the development services.
  config.vm.provision("shell", run: "always", privileged: false, inline: <<-SHELL)
    cd /vagrant
    bundle install
    npm i
    docker compose -f docker-compose.dev.yml up -d
  SHELL
end
```

Like with Docker, I mount the working directory on the host machine to /vagrant on the guest machine.

I have a provision script that will run the first time I create the VM.

The provision script is responsible for installing the needed dependencies (Docker, Node.js, and Ruby on Rails dependencies which I omitted from this code as they are specific to this particular project).

I have another provision script that always runs when I bring the VM up, which installs the needed Ruby and Node dependencies for my project, and starts the development Docker services (postgres and redis in my case).

As of writing this blog post, it seems like the installation process for Claude Code has switched to “native install”.

Instead of running npm i -g, you now need to run a shell script: curl -fsSL https://claude.ai/install.sh | bash, and then add ~/.local/bin to your $PATH, like this: echo 'export PATH="$HOME/.local/bin:$PATH"' >> /home/vagrant/.bashrc

So make sure to replace the npm line with these two lines.
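A minimal sketch of that replacement, assuming the installer is run as the vagrant user (for example from an unprivileged provisioner) and that the provisioner may run more than once. The helper name add_local_bin_to_path is made up for this example; it appends the PATH line only if the file does not already contain it, so repeated provisioning does not pile up duplicates.

```shell
#!/bin/bash

# Append the PATH export to an rc file, but only once.
# add_local_bin_to_path is a hypothetical helper, not part of any tool.
add_local_bin_to_path() {
  local rc_file="$1"
  # Single quotes keep $HOME and $PATH literal; they expand at login time.
  local line='export PATH="$HOME/.local/bin:$PATH"'
  touch "$rc_file"
  # -x matches the whole line, -F treats the pattern as a fixed string.
  grep -qxF "$line" "$rc_file" || echo "$line" >> "$rc_file"
}

# Intended use inside the provisioner (assumption: running as vagrant):
#   curl -fsSL https://claude.ai/install.sh | bash
#   add_local_bin_to_path /home/vagrant/.bashrc
```

Calling the helper a second time on the same file is a no-op.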

After the machine is up, I can ssh into it using vagrant ssh, and start my app development server.

Then, I can open another ssh session, and start Claude Code.

I can have as many ssh sessions, and as many CCs as I need.

I can give CC full access to the VM, root permissions, whatever it needs, so it can install packages, run various tools, whatever.

It’s all inside the VM, and if the VM gets infected, I just destroy it, and bring up a new one.

Nothing app related runs on my host machine.

The only thing that breaks the isolation is the code directory, which is mounted into the VM.

For truly paranoid people, you can specify type: "rsync" in the config.vm.synced_folder call, thus taking a copy of the code into the VM.

In that case, changes made in the VM are not synced back to the host machine.

You either need to sync manually, or set up a git workflow from within the VM.

Some people like to vibe-code 100%, so they don’t edit code manually, but instead let CC make the changes and open PRs.

I still write code manually, and use CC for mundane tasks or code reviews, so I want to be able to edit the code myself, and control when and how I push to git. Therefore, I prefer to go with a synced folder approach, even at the cost of weakening the isolation.

Some of you might have noticed that I also install avahi-daemon on the guest VM.

What I wanted to achieve is being able to open a browser on my host machine, and access the app that is running in the guest VM.

This can be achieved by forwarding ports from the guest to the host, just like with docker, using the network configuration in Vagrantfile:

```ruby
config.vm.network("forwarded_port", guest: 3000, host: 3000, auto_correct: true)
```

But I don’t like exposing too many ports, or having to remember which port each service uses.

If you run a PostgreSQL Docker in every VM, you eventually end up with port mappings like 54321, 54322, 54323, and so on.

I wanted to be able to access the machine by its name.

It is possible to assign a static IP to the VM, and then access the services by the IP, for example 192.168.10.100:5432 for PostgreSQL, but then again, I’d have to memorize the IPs of every VM I run.

Another option is if you run a local DNS server (even PiHole or AdGuard Home), you can create a local DNS entry (or just edit /etc/hosts) and map a name to an IP.

But, with mDNS you can do better.

By running avahi on the guest machine, and properly setting the hostname (using config.vm.hostname = vm_name), you are able to “magically” access http://vm_name.local from your host machine.

Just make sure to bind your services to 0.0.0.0 instead of 127.0.0.1 when you start them.
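Since the Vagrantfile derives the hostname from the project directory name, the URL can be derived the same way on the host. A small sketch, where vm_url is a hypothetical helper and port 3000 is only the default borrowed from the port-forwarding example; it assumes the directory name is a valid hostname (letters, digits, hyphens) and that the host can resolve .local names over mDNS.

```shell
#!/bin/bash

# Print the mDNS URL of the VM that belongs to the current project directory.
# Mirrors vm_name = File.basename(Dir.getwd) from the Vagrantfile.
vm_url() {
  local port="${1:-3000}"
  local name
  name=$(basename "$PWD")
  echo "http://${name}.local:${port}"
}
```

Run from the project directory on the host, something like curl "$(vm_url)" should then reach the dev server in the guest, provided the server was bound to 0.0.0.0.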

Why you probably shouldn’t copy my approach

Someone recently asked on HackerNews why so many people are rolling their own AI sandboxing solution, and I think the best answer was given by varshith17, which basically boils down to:

Same reason everyone rolled their own auth in 2010, the problem is simple enough to DIY badly, complex enough that no standard fits everyone.

My solution is tailored to my needs.

I run auxiliary services in docker containers, so I need a solution where CC can access these containers.

I don’t trust CC to access anything else, and I don’t trust myself to be able to correctly control permissions for CC after a long day of programming.

Eventually, I will slip and approve a request to Bash(rm -rf some-old-code ~/), hence rendering my machine unusable for some time.

My solution does not cover things like proxying requests to make sure CC can only access certain websites, or safeguarding environment variables, as I use only dev credentials that can barely cause any harm if they are leaked. If CC destroys my development database, I have a backup. Your needs might be different, and you might find existing tools, like the ones I mentioned earlier, useful. Or you will decide to roll your own, and with access to diverse information and the experience of others, you will craft a solution that suits your needs.

Safe vibe-coding!